Following on from part one of my last blog – ‘what is bias and how do we avoid it?’ – there is also societal bias, and this can be a doozy!

Recently there has been a surge in art generated by AI from text prompts. Some of it is exceptionally good, and the power of these systems is frankly astonishing.

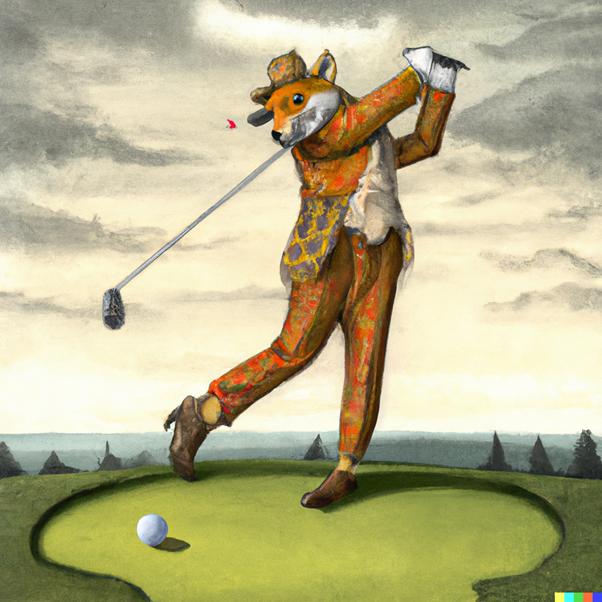

For example I asked for ‘a fox in a tweed suit playing golf on the moon in the style of a Renaissance painting’. Now, that’s quite a specific request but OpenAI’s DALL-E 2 was able to produce this:

I mean, you can argue that the tweed is a bit off, but I think that only proves the point!

We’re clearly going to see more from systems like this, some of which can now run on reasonably standard home PCs!

These systems are extremely complex. They are not simply looking up millions of indexed pictures; each image is a unique creation, conjured up by the AI specifically for the request you make.

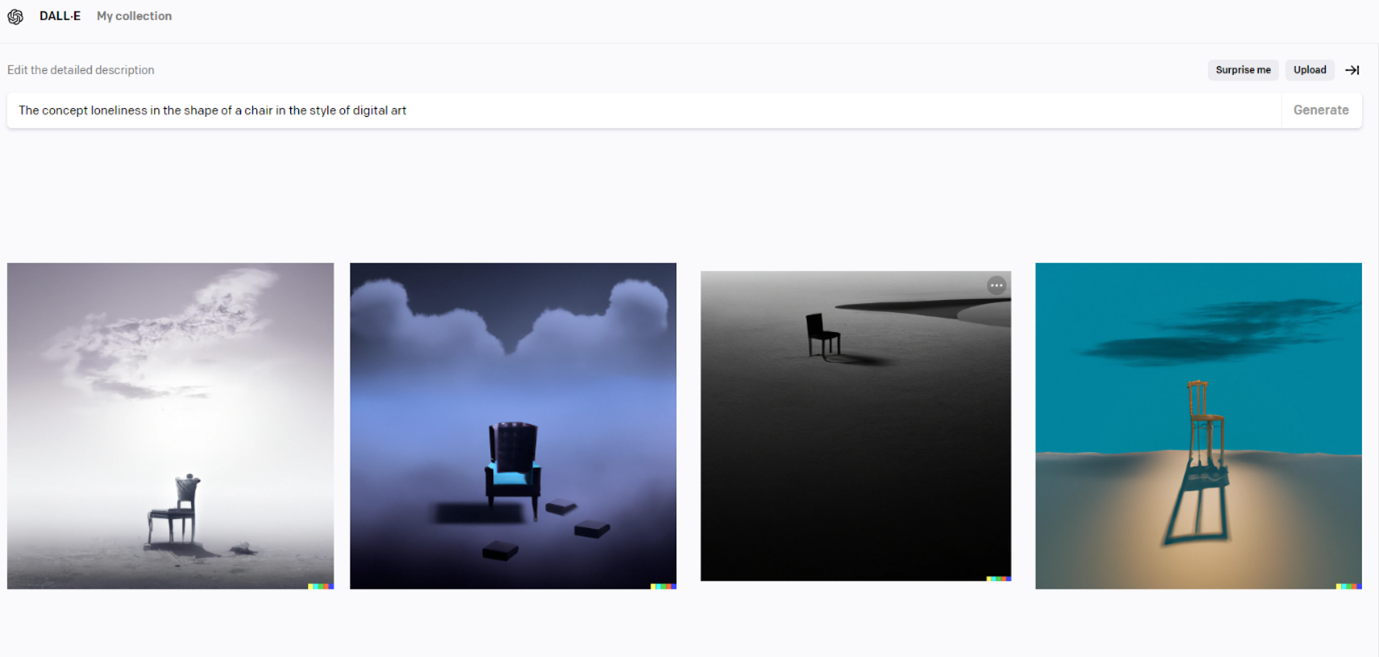

I wanted to see if it could understand abstract concepts as well so I asked for ‘The concept of loneliness in the shape of a chair in the style of digital art’. This is what it produced:

This AI is trained on many millions of images from the internet. Leaving aside the copyright issues of the purloined labour of those artists (an ethical issue in its own right)… what can we learn regarding bias in this system?

I decided to ask it to draw various nationalities socialising. The idea is that it’s a simple task focusing on a standard activity, so we can compare the differences between the results for each nationality.

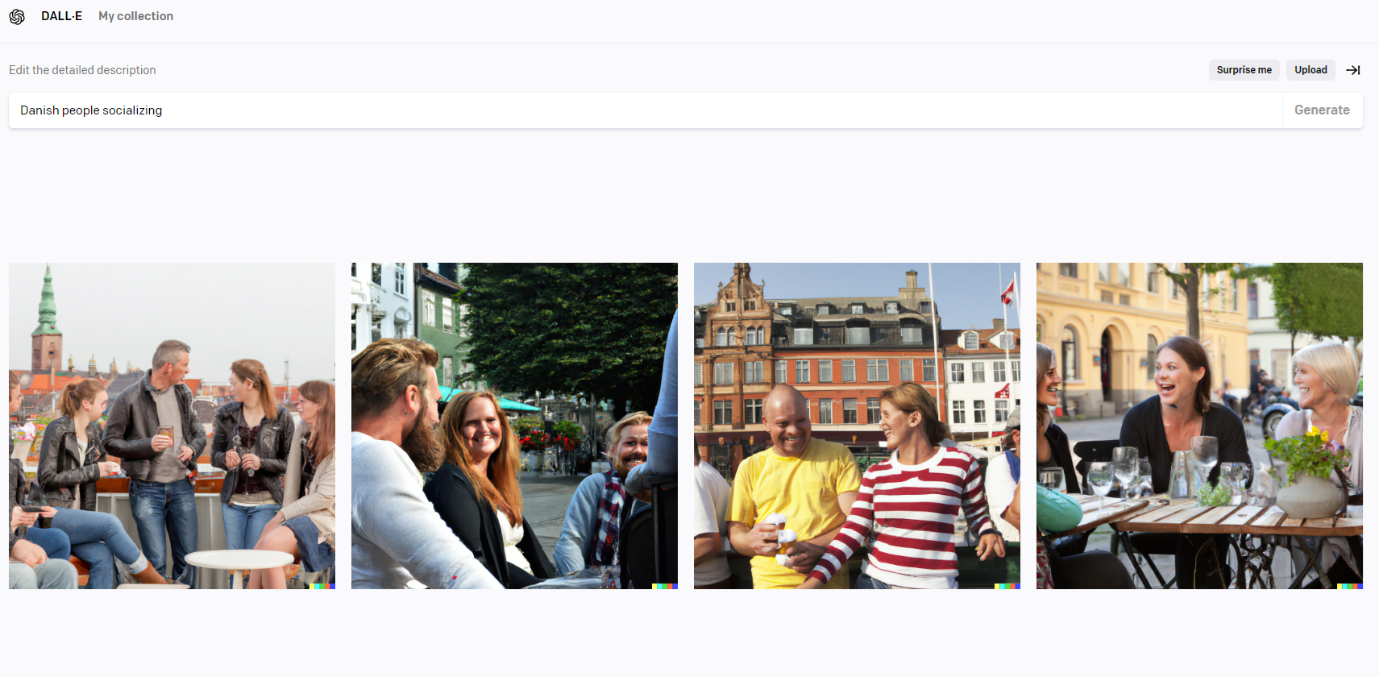

First up, I decided to start with Danish people. What does Dall-E think Danish people socialising looks like?

Now, firstly it must be explained that this version of Dall-E is intentionally designed to mess up faces to avoid creating believable “deep fakes” (fake photos of people). Faces aside, the picture above does a reasonable job of portraying Danish people socialising.

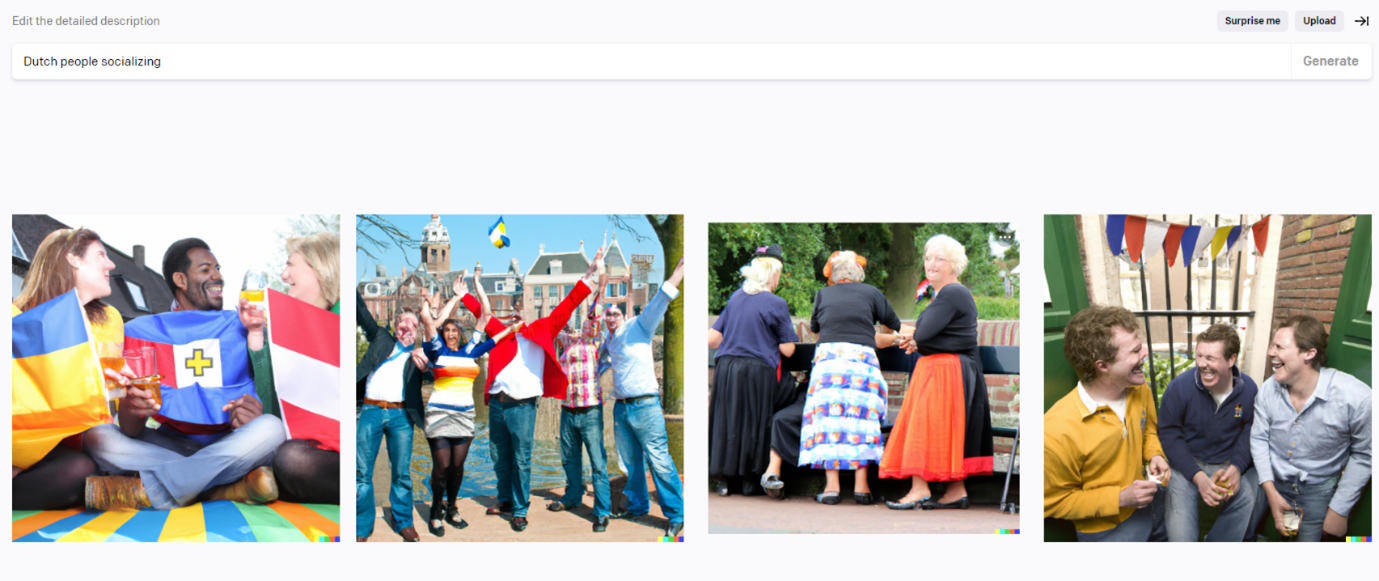

Next, I asked for Dutch people socialising. We have an office in Amsterdam so I thought it might amuse the staff here.

This… is not so good but ok, apart from the lack of heads, the weird flags and some decidedly traditional dresses, I guess it’s ok. Ish.

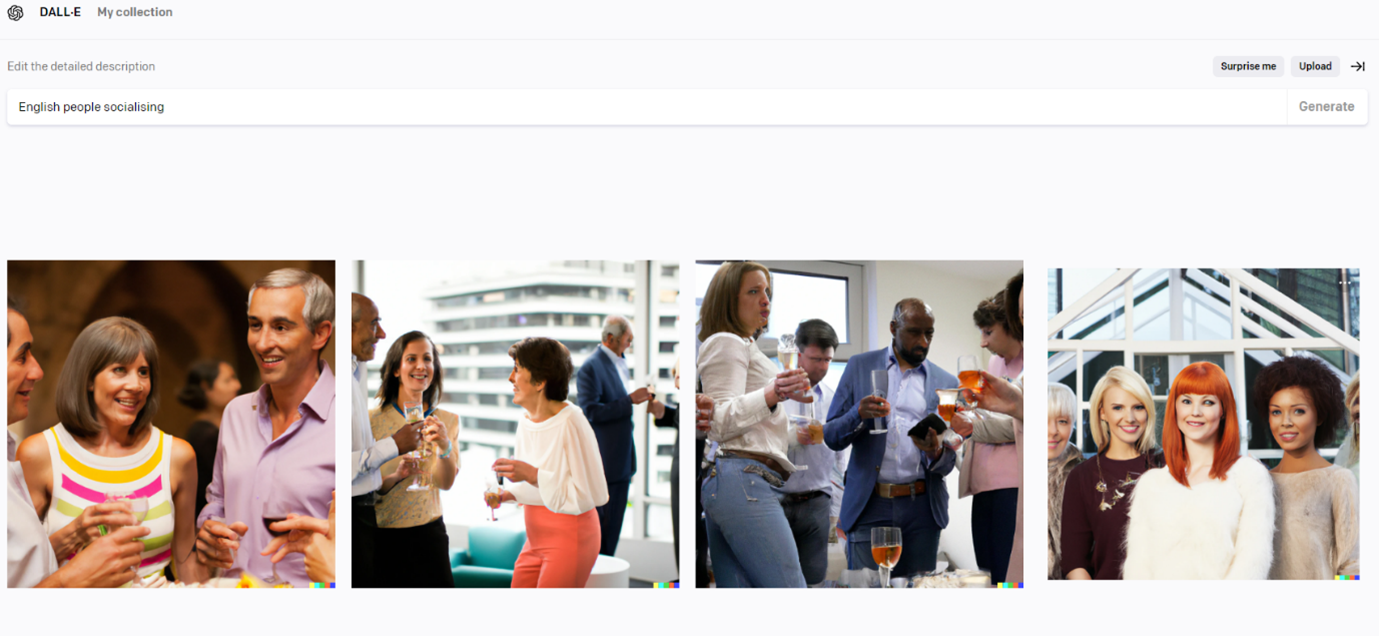

We also have a UK office, so what about English people socialising?

This looks a lot better, modern people seemingly having a good time. Reasonable distribution of races and genders too.

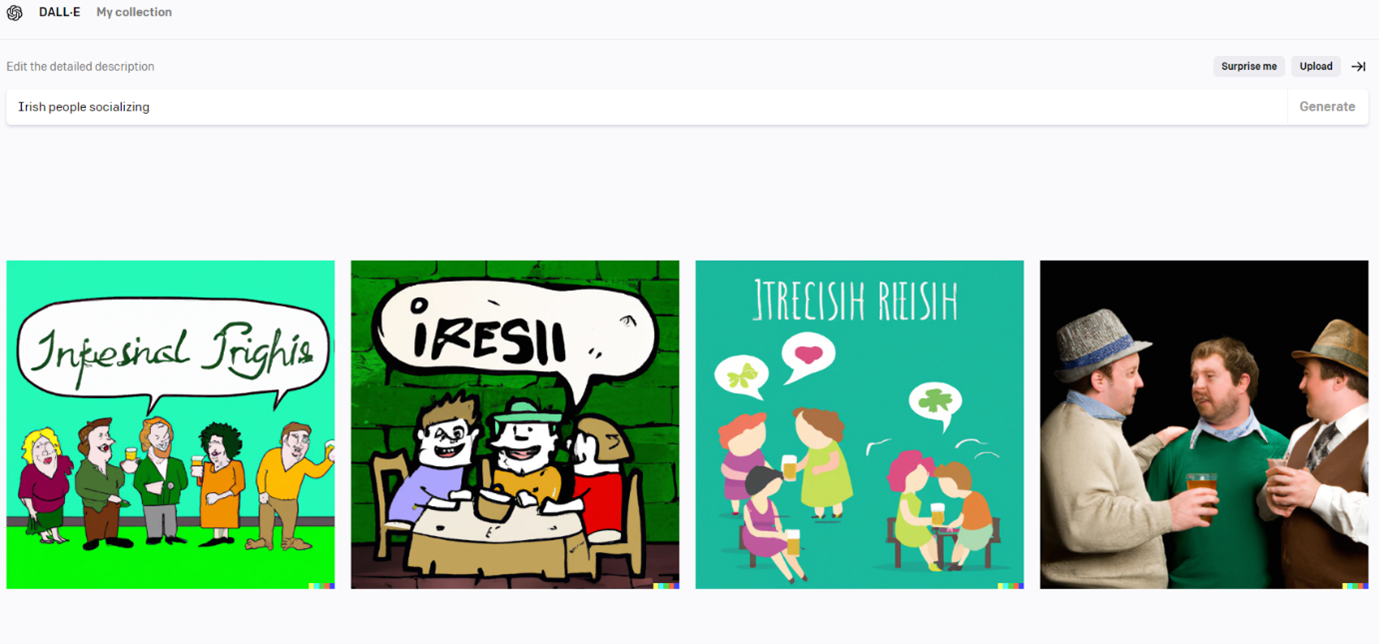

So then because I’m Irish… I asked for … Oh. Oh dear.

Wow.

Where to start… Perhaps with the fact that none of those words spell Irish (not in any language I know). Or that almost everyone is drinking booze.

But one more subtle thing is that in all the other pictures, the images produced were of real people. Admittedly some of the Dutch were headless real people but nonetheless, they were depicted as genuine humans.

Three out of four pictures here are childish cartoons.

The Irish, to this AI, are drunken cartoon people.

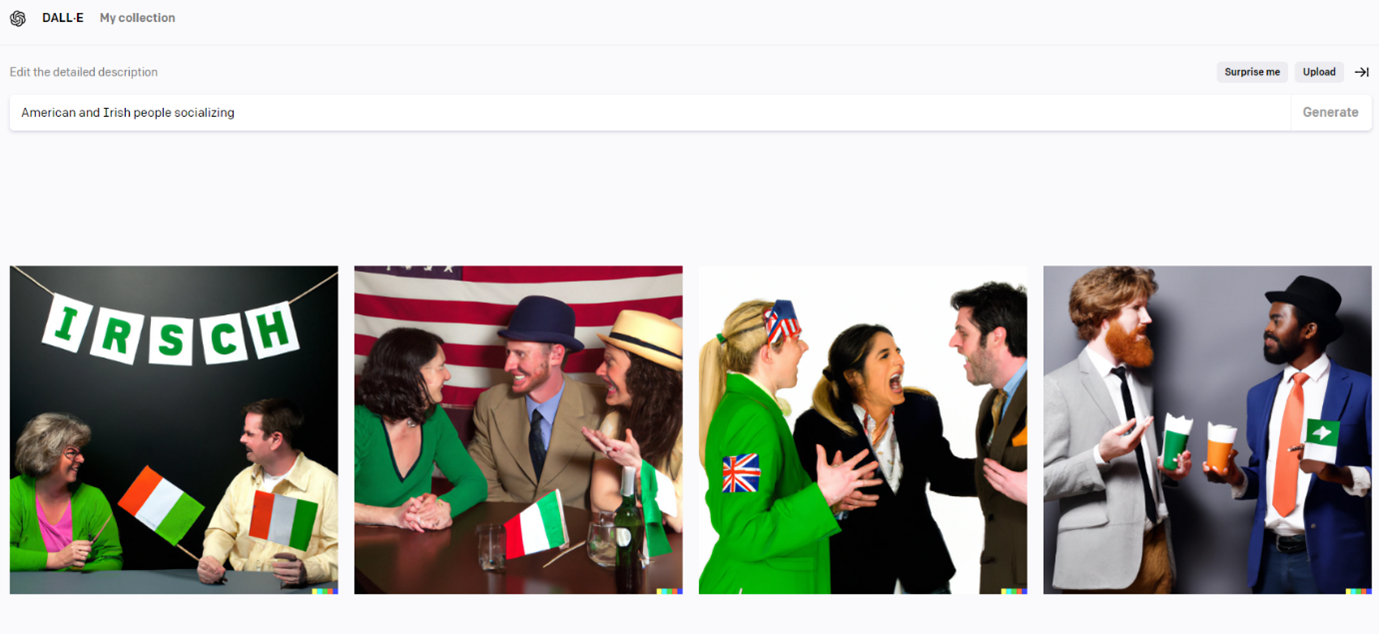

Let’s try Irish socialising with another nationality, perhaps we can force it to treat us as real people.

“American and Irish people socialising.”

Things to note here. That’s still not how you spell Irish!

Not even one of these flags is the actual Irish flag (and why one lady is plastered with the Union Jack, who knows).

The less said about the second and fourth pictures, the better really…

So what’s going on here? Does the AI really think Irish people are cartoonish drunk red beards? Obviously not; it has never met an Irish person. It’s been exposed to a vast number of images from the internet, and that is its only source of information about Irish people. It “thinks” this way because that’s what the internet thinks of Irish people. Try it yourself: go to Google, type in “Irish” and hit images. It’s not pretty! Lots of drunken leprechauns!

The point here for this article is that AI follows the golden rule of computers – garbage in, garbage out. The world is already societally biased to think of Irish people as cartoonishly drunk leprechauns. Why should we expect an AI whose only experience comes from those images to think otherwise?

The problem here goes far beyond some hurt national sensibilities. It’s pretty clear that these AI systems will generate a great deal of art in the coming decades. If they have these biases now and continue to be trained on internet imagery, the biased images they produce will end up back on the internet and in the next round of training data, reinforcing the bias within the system. This sort of bias becomes “systemic”.
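To make that feedback loop a bit more concrete, here is a toy sketch in Python. It is not how DALL-E or any real system is trained; it is a back-of-the-envelope model built on assumptions of my own. The function name `simulate_feedback` and its parameters are made up for illustration: `initial_share` is the fraction of stereotyped images in the training pool, `generated_fraction` is how much of the pool is replaced by AI-generated images each round, and `amplification` is an assumed tendency of the model to slightly over-produce whatever dominates its training data.

```python
def simulate_feedback(initial_share, generated_fraction, amplification, rounds):
    """Toy model: track the stereotyped share of an image pool over training rounds.

    All parameters are illustrative assumptions, not measurements of any real system.
    """
    shares = [initial_share]
    share = initial_share
    for _ in range(rounds):
        # Assumption: the model over-produces whatever dominates its training data.
        model_share = min(1.0, share * amplification)
        # The next pool mixes the existing images with the newly generated ones.
        share = (1 - generated_fraction) * share + generated_fraction * model_share
        shares.append(share)
    return shares


# Example run: 60% stereotyped images to start with, 30% of the pool replaced
# by generated images each round, and a mild 1.2x over-production.
for round_number, share in enumerate(simulate_feedback(0.6, 0.3, 1.2, 10)):
    print(f"round {round_number}: {share:.1%} stereotyped")
```

With an `amplification` of exactly 1.0 the share simply stays where it started: the existing bias is locked in rather than corrected. With anything above 1.0 it creeps upwards every round. Either way, in this toy model, nothing in the loop ever pushes the bias back down.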

The risk is that we make far more serious and less obvious biases systemic too.